Niantic Spatial’s Large Geospatial Model

September 28th, 2025 | by Andreas Richter

(5 min read)

In our recent posts we touched on the topic of Geospatial Artificial Intelligence (GeoAI) to get a brief understanding of what it is and where to find more information. One of the most promising players in this domain is Niantic, which started in 2010 as a startup inside Google and became independent in 2015. Many of us know it as the developer of location-based augmented reality (AR) games such as Ingress and Pokémon Go. These games hooked a lot of users and brought them into contact with geodata. But the company has greater ambitions. After a restructuring and rebranding as Niantic Spatial, the goal is to build a third-generation digital map with a level of fidelity never achieved before. This new map shall be understandable to both humans and machines so that artificial intelligence (AI) can interact with the physical world. To that end, Niantic Spatial introduced a platform for spatial computing last year that blends the digital world into reality with centimeter-level precision.

Geospatial Modeling

We already know large language models (LLMs) and vision language models (VLMs); Niantic Spatial is now building a large geospatial model (LGM). It shall serve as a world model, powered by large-scale machine learning, that enables people and machines to understand and navigate the physical world.

Humans have a spatial understanding of an environment even if we haven’t seen it from all angles or in detail. We can mentally reconstruct buildings from experience and intuition, even across cultural differences. The LGM is meant to give machines a comparable understanding: a spatially grounded and semantically rich representation of real-world locations, based on a proprietary database of over 30 billion posed images (images with a known camera position and orientation). This modeling can enhance spatial reasoning in large language models because it condenses complex reality into a simplified model.

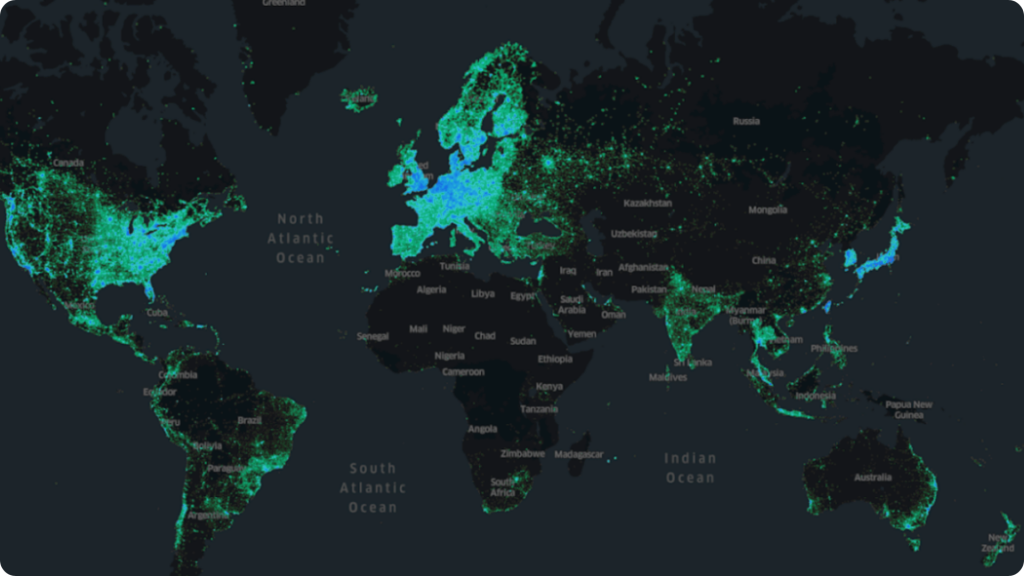

To support this kind of model, Niantic has also introduced the Visual Positioning System (VPS): a cross-platform, engine-agnostic solution, independent of specific hardware vendors and walled-garden ecosystems, for geolocation, orientation, and tracking in six degrees of freedom (6DoF) across pre-mapped locations worldwide. The geolocations have centimeter-level accuracy. This accuracy can be improved by incorporating “world poses” (landmarks) to locate images from areas that are currently not fully covered by VPS. Currently, one million locations are live, enabled by ten million scans, all of them pre-mapped from a pedestrian perspective. Multiple scans per location are necessary because each point in the environment needs to be observed from different perspectives, angles, and lighting conditions so that the model is robust to changes. Such scans are easy to collect if a large and active user base is available: Niantic offered in-game rewards in Pokémon Go and Ingress for submitting augmented reality scans of so-called Wayspots. Niantic’s database now holds 17 million Wayspots, and the company prioritizes those located in areas with an active local player community when collecting more scans. For enterprise applications, it is of course also possible to create and manage access-controlled VPS locations that are not shared with third parties.

The outcome is two-fold:

- One map lets you position yourself in the world captured by the user scans; it is geodata, not a visualization. This map represents the data.

- The other map lets you “see” the world, which means visualizing the geometry, the texture, and what’s in front of the camera. This map visualizes the data.
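To make the positioning side more tangible: a pose in six degrees of freedom combines three position values with three orientation values. The following is a minimal sketch of what "centimeter-level" means when an estimated pose is compared against a reference. The `Pose6DoF` class, both helper functions, and the sample numbers are hypothetical illustrations and are not part of any Niantic API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical container for a 6DoF pose (not a Niantic data type)."""
    x: float      # position in meters, in some local frame
    y: float
    z: float
    roll: float   # orientation in degrees
    pitch: float
    yaw: float


def position_error_cm(estimate: Pose6DoF, reference: Pose6DoF) -> float:
    """Euclidean distance between the two positions, in centimeters."""
    dx = estimate.x - reference.x
    dy = estimate.y - reference.y
    dz = estimate.z - reference.z
    return math.sqrt(dx * dx + dy * dy + dz * dz) * 100.0


def yaw_error_deg(estimate: Pose6DoF, reference: Pose6DoF) -> float:
    """Smallest absolute heading difference, wrapped into [0, 180] degrees."""
    diff = (estimate.yaw - reference.yaw) % 360.0
    return min(diff, 360.0 - diff)


# Made-up sample values, chosen only to illustrate the calculation.
reference = Pose6DoF(x=0.0, y=0.0, z=0.0, roll=0.0, pitch=0.0, yaw=350.0)
estimate = Pose6DoF(x=0.03, y=0.04, z=0.0, roll=0.0, pitch=0.0, yaw=10.0)

print(f"position error: {position_error_cm(estimate, reference):.1f} cm")
print(f"yaw error: {yaw_error_deg(estimate, reference):.1f} deg")
```

In this made-up example the estimate is off by 5 cm in position and 20 degrees in heading. The heading difference is wrapped so that 350 and 10 degrees count as 20 degrees apart rather than 340.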

With the Visual Positioning System, Niantic Spatial has trained more than 50 million neural networks with more than 150 trillion parameters, enabling operation in over a million locations. In Niantic’s vision for a large geospatial model, each of these local networks would contribute to a global large model. Large language models shall evolve from “words” via “images” (vision language models) towards 3D assets (geospatial models). In the end, all these stages are merged and knowledge is transferred to new locations, even if those have been observed only partially. Like human intuition, the LGM extrapolates locally by interpolating globally. In addition, region-specific rules of symmetry, generic layouts, and typical appearances are applied so that the model is prepared to understand and represent assets at a location.
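The figures quoted at the start of this section give a rough sense of scale. The back-of-the-envelope calculation below assumes that the 150 trillion parameters are a total across all networks and treats the "more than" figures as exact, so the results are only orders of magnitude, not published numbers.

```python
# Lower-bound figures quoted in the text ("more than" / "over" in each case).
num_networks = 50_000_000               # local neural networks
num_parameters = 150_000_000_000_000    # parameters, assumed to be the total
num_locations = 1_000_000               # locations served

avg_parameters = num_parameters // num_networks
networks_per_location = num_networks // num_locations

print(f"about {avg_parameters:,} parameters per local network")
print(f"about {networks_per_location} networks per location")
```

Under these assumptions, each local network is small (around three million parameters) and a single location is covered by several dozen of them, which fits the picture of many local networks contributing to one global model.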

Data Input

Running such models requires a huge amount of training data. Niantic covers part of that through its location-based AR gaming community, which contributes scans with pedestrian-level detail. But this community is not uniformly distributed and its activity varies. Additional data sources are therefore of interest, and the LGM will rely on data ingestion from LiDAR and 3D scanning for digital twins, aerial imagery for land use and infrastructure, Internet of Things (IoT) sensor networks for real-time environmental monitoring as we know it from smart city models, behavioral data of the actors involved and, last but not least, operational business data to tie spatial context to observed or measured outcomes. All these data layers are pre-processed using artificial intelligence to identify patterns and relationships and to put every data point into context with the others.
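One simple way to picture how data points from different layers are put into context with each other is to bucket them by location. The sketch below is a toy illustration only: the `Observation` type, the source labels, the grid size, and the sample coordinates are all assumptions, and a plain latitude/longitude grid stands in for whatever spatial indexing and AI-based pre-processing a real pipeline would use.

```python
import math
from collections import defaultdict
from typing import NamedTuple


class Observation(NamedTuple):
    source: str   # illustrative labels such as "user_scan" or "iot_sensor"
    lat: float
    lon: float
    value: str


def cell_key(lat: float, lon: float, cell_deg: float = 0.001) -> tuple[int, int]:
    """Map a coordinate to a coarse grid cell (0.001 deg is about 111 m of latitude)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def group_by_cell(observations: list[Observation]) -> dict[tuple[int, int], list[Observation]]:
    """Collect observations from all sources that fall into the same grid cell."""
    cells: dict[tuple[int, int], list[Observation]] = defaultdict(list)
    for obs in observations:
        cells[cell_key(obs.lat, obs.lon)].append(obs)
    return dict(cells)


# Made-up sample data: two nearby observations and one about a kilometer away.
observations = [
    Observation("user_scan", 52.5201, 13.4051, "statue, pedestrian view"),
    Observation("iot_sensor", 52.5204, 13.4057, "air quality reading"),
    Observation("aerial_image", 52.5301, 13.4051, "land use: park"),
]

cells = group_by_cell(observations)
for key, members in cells.items():
    print(key, sorted({obs.source for obs in members}))
```

Here the user scan and the sensor reading end up in the same cell and can be related to each other, while the aerial image lands in a different one.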

Purpose

What can we do with this kind of model? Compared to general-purpose tools, which are (mis-)used for more or less everything, specialized models have a narrower field of application. Large geospatial models should support forecasting infrastructure needs before they arise, modeling traffic or crowd flows in a realistic and extrapolatable way for urban planning, and predicting environmental changes. To make these analytic results usable, following the two-kinds-of-map approach from above, the data has to be visualized in individual layers for intuitive decision-making and also exposed via API so that the insights can be integrated into third-party enterprise systems. This also enables general-purpose tools to benefit from specialized expert systems when handling general questions and tasks.

Conclusion

For every problem there is a solution, and if we deal with geodata there should also be a spatial intelligence tool or implementation. This principle applies to the AI domain as well, and Niantic, as a company with a background in location-based augmented reality applications, has a very good foundation for the promising endeavor of building a large geospatial model (LGM). It should bridge the gap between the human understanding of spatial representation and the relationships between things on the one hand, and the machine capability for pattern recognition and recreation on the other. Building good AI models requires clean and correct training data, especially for topics that are more complex than text creation.

An LGM can make digital twins of smart cities more versatile (e.g., with respect to the correctness of predictions). It can also support the creation of such models, either by completing data from learned knowledge where data is only partially available or by fusing new data sources into the model.

As always, creating such models carries risks, as we have already seen general-purpose tools misused for malicious activities. Geodata can turn into personal data if you have enough of it to identify and derive patterns of behavior, and machines are very good at this. There is therefore also the potential that LGMs are used to the detriment of society.