The Meaning of Life of a Digital Twin

December 27th, 2023 | by Andreas Richter


Data is the fuel of modern Industry 4.0. Digital Twins are the solution for developing and operating ever more complex systems and systems of systems. But what is a Digital Twin? We already mentioned the term in our mission posting, and now it's time to dig into this buzzword, as we have already done for Smart Cities.

Theory

In general, a Digital Twin is a data representation of reality. Its scope is always limited, but there is no restriction on the physical size or complexity of the real-world asset it represents. The concept of the Digital Twin was established around 2010 along with the emerging trend of the Internet of Things. In the IoT, ever smaller systems were connected into sensor networks to operate more complex systems. The Digital Twin, in contrast, was used to represent something as a model in order to optimize its design and usage. The aerospace domain was the first to establish the concept, because conducting real-world tests of systems in their designated environment is more expensive there than in other domains.

In addition to a pure representation of reality, a Digital Twin can also include processes and services that represent the usage and behavior of a system. The purpose is to simulate, for example, the production and usage of an asset in order to:

- test production methods as well as the intended functionality and the impact of usage.

- monitor the real-world siblings to ensure smooth operation.

- maintain the real-world siblings so that wear does not lead to critical damage.

- optimize the production process (and its planning), operations and maintenance.

The Digital Twin concept implies not only analyzing the past through collected historical data but also applying pattern recognition and, by constantly updating the digital representation, enabling predictions about the future.

Thus, the concept can be applied on the one hand to a production process (designing, operating, maintaining, and optimizing the production of assets) and on the other hand to the lifecycle of products (operating, maintaining, and optimizing assets). A production facility may itself be seen as an asset that makes use of a Digital Twin.

In the production domain, assets are first created digitally, and production is set up later on. In the geodata domain, various stakeholders are starting to build digital representations of existing real-world assets (roads, rails, buildings, factories, districts, even cities, etc.) in order to carry out their tasks afterwards. Nevertheless, in infrastructure planning and, as one of us GEONATIVES knows from personal experience, in training, Digital Twins have been built for quite some time before the real track was available, so that, for example, train drivers could be trained to operate in their future environment before it became operational.

Geodata is the fuel for urban planning. Of course, using geodata to carry out specific tasks of a city isn't a new idea. Road operators have been using (geo)databases to manage their assets for decades. With this information, for example, additional services such as routing for heavy goods transport can be added. Using sensors such as traffic cameras to measure flow rates and traffic density enables demand management. From there, the idea of combining more and more pieces of the puzzle follows naturally.

This was the starting point for the Smart City concept. Traffic isn't an isolated use case; it is closely connected to other needs of the stakeholders involved: to optimize traffic flow in cities, it also helps to provide individual traffic participants with information about supply infrastructure and available parking capacity. Providing short-term information about traffic situations can help avoid accidents and congestion.

But Smart City is not only about traffic. Civil planning, urban green spaces, environmental effects such as solar energy harvesting efficiency, pollution and air exchange, as well as supply and disposal infrastructure are also integral parts of the planning. It started with Building Information Modeling (BIM), which has the same goals as the Digital Twin concept but was established much earlier, in the 1980s (although it only became popular after 2000). BIM is a cross-functional concept for design, decision-making, and management of a physical object based on its digital representation. It combines the different domains necessary for a functional building, such as construction, supply and disposal infrastructure, communication, transport, and logistics. It can be applied to a single asset, and these assets need not be single buildings; they can be whole building complexes.

With climate change and growing awareness of limited resources, the idea emerged to also understand buildings as sources of materials. Instead of simply demolishing a structure and disposing of the rubble, a building is seen as a source of material for new constructions. For that, the building's cadaster needs a higher level of detail than was previously necessary for modeling the construction itself and its infrastructure. Each component carries information about how often it was used, where it was used, and which material was used to manufacture it, even down to which alloy was used for coating. With that in mind, an accurate representation of such buildings can reach a level of detail (LOD) of up to 600! Madaster is one such trailblazer in the domain of building information modeling. Based on all the materials used, it can calculate the complete environmental impact of a building over its whole life cycle, including future reuse and recycling.
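To make the material-inventory idea a bit more tangible, here is a minimal Python sketch of how a lifecycle impact figure could be aggregated from per-component material data. The `Component` fields, the `EMISSION_FACTORS` values, and the flat `REUSE_CREDIT` are hypothetical placeholders chosen for illustration; they are not Madaster's data model, method, or real emission factors.

```python
from dataclasses import dataclass

# Hypothetical emission factors in kg CO2e per kg of material (placeholders, not real data).
EMISSION_FACTORS = {"steel": 2.0, "concrete": 0.1, "timber": 0.5}
# Hypothetical share of a component's impact credited back if it can be reused later.
REUSE_CREDIT = 0.5


@dataclass
class Component:
    """One building component as it might appear in a material cadaster (hypothetical fields)."""
    name: str
    material: str
    mass_kg: float
    reusable: bool


def lifecycle_impact(components: list[Component]) -> float:
    """Sum the embodied impact of all components, crediting those that can be reused."""
    total = 0.0
    for c in components:
        impact = c.mass_kg * EMISSION_FACTORS[c.material]
        if c.reusable:
            impact *= 1 - REUSE_CREDIT  # reuse avoids part of the impact of new material
        total += impact
    return total


building = [
    Component("beam", "steel", 1000.0, reusable=True),
    Component("slab", "concrete", 20000.0, reusable=False),
    Component("facade", "timber", 500.0, reusable=True),
]
print(lifecycle_impact(building))
```

The more detail each component carries (alloy, coating, previous uses), the finer such factors could be resolved, which is exactly why the required level of detail grows.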

But back to the theory. Different scopes of a Digital Twin can be defined:

- Pure representation of reality. Some would not call this a Digital Twin.

- Data integration (a kind of upload) to update the representation of the real-world sibling. The representation can thus always be adapted to changes in reality. Such updates can be provided continuously by sensors or in bulk by renewing whole data sets.

- Outgoing data (a kind of download) to control or operate the real-world sibling, which makes it more than just a download of data. Third-party applications can access the data via interfaces and process it for further activities.

- Interfacing with humans, e.g., via augmented reality, to provide the digital representation as a service. For that, the data of the Digital Twin may need to be transformed into other representations that are easier for humans to understand.
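The four scopes above can be sketched as a tiny Python class. This is only an illustration under simplifying assumptions: the class `DigitalTwin`, its methods, and the asset and sensor names are all hypothetical, the state is a flat key/value dictionary, and the "control" path merely queues commands instead of talking to a real device. The update log also hints at the traceability of origin and updates discussed below.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class DigitalTwin:
    """Hypothetical minimal twin of one asset, illustrating the four scopes."""
    asset_id: str
    state: dict[str, Any] = field(default_factory=dict)                # scope 1: representation
    history: list[tuple[str, str, str]] = field(default_factory=list)  # (timestamp, source, key)
    outbox: list[dict[str, Any]] = field(default_factory=list)         # scope 3: commands to the sibling

    def _log(self, source: str, key: str) -> None:
        # Record where each update came from, so the data stays traceable.
        self.history.append((datetime.now(timezone.utc).isoformat(), source, key))

    # Scope 2: upstream data integration
    def ingest_reading(self, source: str, key: str, value: Any) -> None:
        """Continuous update from a single sensor reading."""
        self.state[key] = value
        self._log(source, key)

    def replace_dataset(self, source: str, data: dict[str, Any]) -> None:
        """Bulk update: the whole state is renewed from a new data set."""
        self.state = dict(data)
        for key in data:
            self._log(source, key)

    # Scope 3: downstream data for third parties and control of the real sibling
    def query(self, key: str) -> Any:
        return self.state.get(key)

    def send_command(self, command: str, **params: Any) -> None:
        self.outbox.append({"asset": self.asset_id, "command": command, **params})

    # Scope 4: a human-readable representation
    def summary(self) -> str:
        items = ", ".join(f"{k}={v}" for k, v in sorted(self.state.items()))
        return f"{self.asset_id}: {items}"


twin = DigitalTwin("bridge-42")
twin.replace_dataset("survey-2023", {"length_m": 120, "load_limit_t": 40})
twin.ingest_reading("strain-gauge-7", "strain_ue", 310)
twin.send_command("reduce_speed_limit", kmh=50)
print(twin.summary())  # bridge-42: length_m=120, load_limit_t=40, strain_ue=310
```

A real twin would split its state into separately versioned data sets and expose the query path through a standardized interface rather than a Python method, but the split into upstream, downstream, and human-facing paths stays the same.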

Digital representations of reality became feasible because more and more information is available in digital form and processing large data sets has become ever cheaper. You don't even have to own computing infrastructure anymore, because it can be rented, too.

Interfaces allow shared access to the same data without the need to create duplicate data sets. Downloading and transforming data to process it individually often caused glitches and misalignments when the parts from different stakeholders were combined into the final product. Information had to be converted into other data formats that could not represent it at the same level of detail or granularity. With centrally accessible Digital Twins, every stakeholder works on the same data, so discrepancies caused by diverging copies or outdated versions are avoided.

Ideally, standardized (and open) interfaces are used, which can be implemented in various tools. They enable new stakeholders to access the data and to contribute to it. They also enable independent third parties to double-check the data. The data sets become traceable with respect to their origin and updates. Additionally, links or references between data sets, and between parts of the data, can provide more insight, e.g., into the correctness of the data, because comparison becomes easier.

Practice

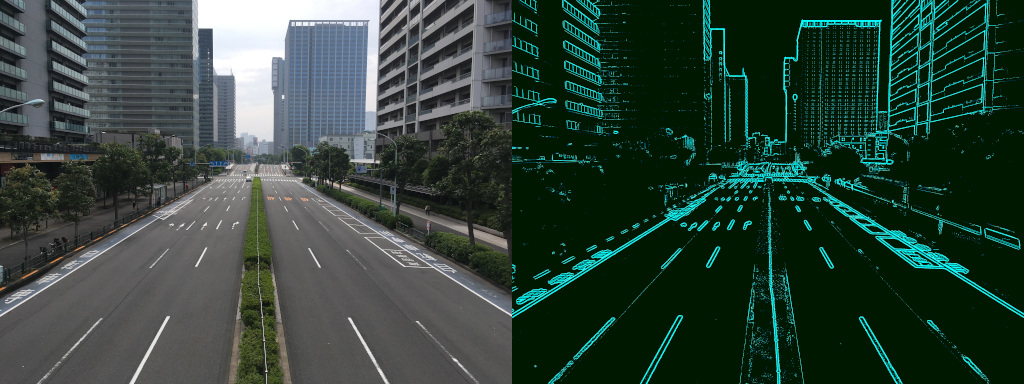

As mentioned previously, sensor upstream capability can provide updates to the Digital Twin. Smart Cities use various sensors to update the representation of a city's infrastructure, as we elaborated on in our feature on how to get cities smart. And because we are also active in the mobility domain, connecting both topics is obvious. Automated or Autonomous Driving Systems (ADS) can also act as sensors, scanning the city infrastructure or providing data about traffic flow or weather conditions. They can act as richly equipped probes floating around in the service area. In turn, the ADS could use the Digital Twin of the city for localization as well as for global and micro routing.

Because of its complexity, an ADS will have a Digital Twin of its own for operation, as is already done in the aerospace and railroad domains to maintain expensive assets. Additionally, it is important to reproduce and trace decisions of the ADS in case of incidents; a digital representation is therefore useful for re-simulating specific situations to prove the correctness of the ADS's sense, plan, and act steps.

In the end, the overall goal of a product's Digital Twin (and in this case, let's assume a city is a product, too) is to perform predictive maintenance: forecasting wear and repairing machines and infrastructure before critical damage occurs. These complex calculations require computing resources that exceed the capabilities of single assets. For cities, this is no big deal, because data centers are already needed to operate the digital city. For an ADS, it is harder: on the one hand, it has enough computing power to process huge amounts of data; on the other hand, it lacks the storage for all the historical data. Additionally, comparing data from similar assets helps to identify patterns that reveal potential wear, so huge amounts of data have to be uploaded to data centers.
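Real predictive maintenance relies on far richer pattern recognition, but the basic idea of "forecast wear, then repair in time" can be shown with a deliberately simple stand-in: fit a straight line (ordinary least squares) to wear measurements and extrapolate when a wear limit would be reached. The measurements, the 4.0 mm limit, and the 60-day lead time below are hypothetical values for illustration only.

```python
from typing import Optional


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_limit_crossing(days: list[float], wear: list[float], limit: float) -> Optional[float]:
    """Day on which the fitted wear trend reaches `limit`, or None if wear is not increasing."""
    slope, intercept = fit_line(days, wear)
    if slope <= 0:
        return None
    return (limit - intercept) / slope


def maintenance_due(today: float, crossing_day: Optional[float], lead_time_days: float) -> bool:
    """Schedule maintenance once the predicted crossing is within the lead time."""
    return crossing_day is not None and crossing_day - lead_time_days <= today


# Hypothetical wear measurements of one component (days since installation, wear in mm).
days = [0.0, 30.0, 60.0, 90.0]
wear_mm = [1.0, 1.5, 2.0, 2.5]
crossing = predict_limit_crossing(days, wear_mm, limit=4.0)  # about day 180
print(crossing, maintenance_due(90.0, crossing, lead_time_days=60.0))
```

Comparing such trends across many similar assets is where the data-center side comes in: a single vehicle sees only its own history, while the fleet-wide view reveals which patterns actually precede failures.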

An even more interesting feature could be forecasting the behavior of traffic participants (or events in general). The behavior of individual vehicles can be observed and their intentions inferred. The intended actions of all traffic participants can then be compared and optimized, and suggestions can be provided. For autonomous driving, it could be even more helpful to compensate for missing information, e.g., due to blind spots of the ADS, with observations from the infrastructure. Taken to the extreme, the infrastructure could take over complete control of the system by receiving pre-processed sensor data, but this would require at least very low communication latency and high bandwidth. It is questionable whether it is cheaper to set up such infrastructure or whether ADS will become powerful and capable enough to operate on their own (as was the goal from the very beginning). The coverage that infrastructure can potentially achieve will definitely play a key role in this consideration.
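Intention estimation in practice uses far more elaborate models, but the principle of comparing the predicted actions of two traffic participants can be sketched with the simplest possible motion model: constant-velocity extrapolation and the closest point of approach. The `Track` class, the example car and cyclist, and the 2 m safety radius are hypothetical.

```python
import math
from dataclasses import dataclass

SAFETY_RADIUS_M = 2.0  # hypothetical minimum separation


@dataclass
class Track:
    """Observed 2-D state of one traffic participant (metres, metres per second)."""
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(a: Track, b: Track, horizon_s: float) -> tuple[float, float]:
    """Minimum distance and its time within [0, horizon_s], assuming constant velocities."""
    px, py = b.x - a.x, b.y - a.y      # relative position
    vx, vy = b.vx - a.vx, b.vy - a.vy  # relative velocity
    vv = vx * vx + vy * vy
    t = 0.0 if vv == 0 else -(px * vx + py * vy) / vv
    t = min(max(t, 0.0), horizon_s)    # only look ahead, up to the horizon
    return math.hypot(px + vx * t, py + vy * t), t


car = Track(0.0, 0.0, 10.0, 0.0)        # heading east at 10 m/s
cyclist = Track(50.0, -20.0, 0.0, 4.0)  # heading north at 4 m/s
dist, t = closest_approach(car, cyclist, horizon_s=10.0)
print(dist, t, dist < SAFETY_RADIUS_M)  # 0.0 5.0 True
```

An infrastructure-side Digital Twin could run such a check for every pair of observed participants, including those hidden in an ADS's blind spot, and turn a flagged conflict into a suggestion for one of them.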

Conclusion

Multiple Digital Twins (of the ADS) will interact with the Digital Twin of a Smart City's traffic system, which is itself, again, a Digital Twin. This is truly a system of systems and, in the end, a simulation of everything… and this feature ends the way it started: with excessive use of buzzwords. After all, Digital Twin (as well as Smart City) is often used as a label to make the state of the art look fancier and newer.