Expert Talk with Brad Templeton: No standard answer!

September 21st, 2022 | by GEONATIVES


How would you feel if you were chasing the holy grail and someone came around the corner and told you that it's not holy at all? This is more or less what happened to us GEONATIVES at the end of August 2022, in a very intense 30-minute talk with Brad Templeton, a multi-talented software developer, author, speaker and self-driving car consultant.

But first things first. We had the pleasure of introducing our blog and the GEONATIVES initiative to Brad and chatting with him about geo- and mobility data in general, mapping and… standardization. It took a little while to explain that we are Germans crazy enough to run our blog without any commercial aims, but within a few minutes we had reached our core subjects.

Mapping in all its flavors is a big subject in the US, and there is a wide gap between publicly funded and held data, private data, and private data that is made publicly available. The bottom line concerning the first kind of data is "don't trust public data". This is not so much about consumers distrusting the government as about commercial entities betting their success on the quality of public data (e.g., its accuracy and freshness).

From the very beginning of our blog, we have been arguing that "measure once, use many times" might be the most efficient way of mapping the world and navigating through it with various mobility solutions. And having this data in the hands of public bodies would be a way to spend taxpayers' money wisely.

But the world is not that simple. Data and good data are two different things. Why, for example, do OEMs purchase parts from established Tier 1 suppliers instead of from cheaper alternatives that might be available on the market? It's about quality, availability and, along with them, liability.

In applications like robotaxis, you wouldn't want to bet your customers' lives on the accuracy of the data in your system unless you were 100% sure that this data meets your quality standards and has passed a long list of tests (just think of the couple of billion miles an autonomous vehicle is supposed to have driven in simulation without a fatal accident before it is considered ready for deployment in the real world).

As we learned, tech companies were not keen on collecting and processing their own mapping data at first. Only after importing data from all over the world, conditioning it and trying to make it match did they decide to deploy their own sensor-equipped fleets and gather their own trusted ground truth, based on a single data source tailored to their own use case.

This should sound rather familiar to anyone who has ever had to rely on customer-furnished data in, for example, a simulation project. How many hours have been spent conditioning such data, making it match, etc., only to find out in the end that the accuracy was below expectations and still more data had to be collected?

So it's no wonder that, instead of sourcing data from elsewhere, deep-pocketed tech companies create their own data lakes and keep them up to date by adding data collected during the regular operation of their mobility devices or sensors. Mobileye, for example, is doing this with its Road Experience Management project.

Top-tier tech companies have created tool chains that enable not only efficient processing of the data but also the necessary quality assurance. This gives them an edge over mid-tier companies, which have to buy their data from other sources. In some cases, new companies specializing in creating maps from crowd-sourced data have come into existence, DeepMap (now part of NVIDIA), just to name one. The only other option is not to use map data at all. So far, though, only Tesla claims to get along without it.

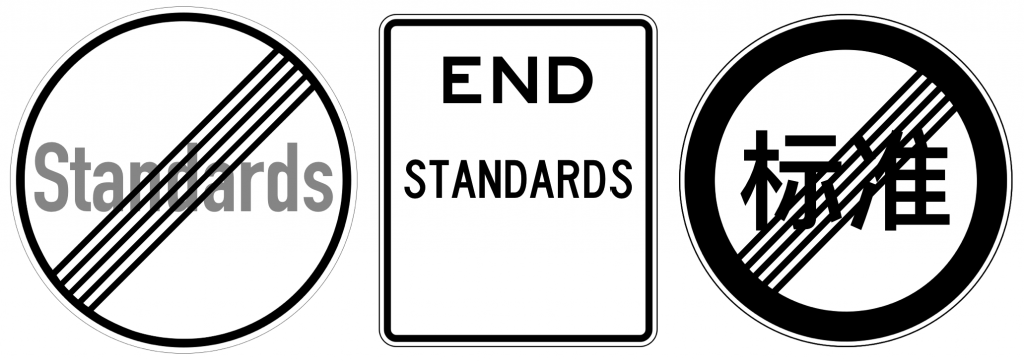

Now, there seems to be a lot of data available, and everyone seems to be doing the same thing again and again. Wouldn't standardizing data formats and the accompanying software make it a lot easier to combine these vast data pools and extract even more value from them?

The obvious answer, we thought, would be a clear YES. But, as we learned, a NO might be even more obvious for the parties involved. Why is that?

As Brad clearly stated, hardware standards are great, but standards for software and data formats are overrated. As the tech companies have shown, it is easier and faster for them to go their own way and gain critical mass and, thus, market share. Some projects simply put a higher premium on speed than on efficiency. And provided your pockets (or your investors') are deep enough, being among the first in the market just pays off.

Innovation – at least within your own silo – still seems to be gaining speed, too. Why spend all that time in harmonization meetings, with each party following its own agenda, only to agree on a standard that is, in the best case, the lowest common denominator? Standardization projects are extremely slow compared to current development cycles in the IT domain. This often results in vague definitions being released after years of review cycles…

This certainly gives us naïve standardization evangelists food for thought. And we clearly see the points Brad has been making. But isn't it, ultimately, the same world we all have to live in, and shouldn't we do so cooperatively? If we don't speak the same language, communication will be hard and error-prone. So standards might sometimes pave the way a little more slowly, but Rome wasn't built in a day either – and it still exists!

Thanks, Brad, for talking to us!