Have We Already Had Enough?

November 29th, 2022 | by Andreas Richter

When people in the automotive domain talk about automated driving, the question “How good is good enough?” often arises. It concerns the capability of the automated driving system compared with an average human driver. There is also the question of how much testing is needed to guarantee that the system is good enough. But what about the systems we use for testing? How good do they have to be? Let’s collect some (philosophical) questions!

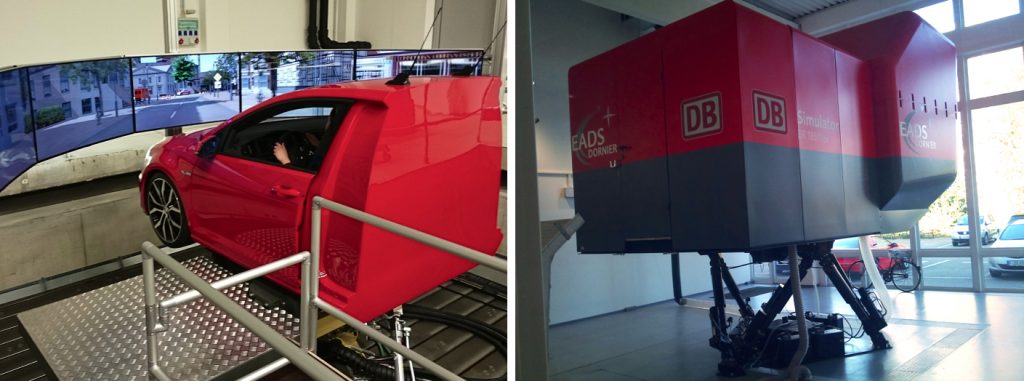

Before automated driving became popular, it was all about driver assistance and fine-tuning the car’s setup and appearance. For that, human-in-the-loop simulation was the tool of choice. Such simulation could also be used to understand a driver’s behavior and to train drivers. Thus, the “interface” to the human was essential.

Visualization: See what is

Rendering the environment and displaying it to the human in the loop is the most important interface. The test person has to immerse themselves in the driving task to deliver realistic behavior. What kind of rendering do we need? Most of us remember getting sucked into old computer games, even though their graphics are worlds apart from those of today’s games. For some studies it was enough to have just abstract blocks moving around on very simplified roads. If the human understands the situation, meaning that the scene is familiar to the test person, we get what we are looking for: realistic behavior.

Nevertheless, the visualization in modern driving simulators is more elaborate than just moving blocks, but it often still doesn’t look realistic: the roads are simplified in geometry, textures repeat, and a lot of what you find in a real road environment is still missing (in German environments especially, the large number of parked cars). The general environment can also have varying levels of detail: textured walls replace accurately modeled 3D buildings, roads are flat rather than bumpy (and may have no crossfall), and all the trees look alike.

In the railway domain, some issues are less complex. Because trains are bound to the rails, you may come up with the idea of recording a video of the desired track and making it drivable, because then you see the real thing. And, indeed, this is often done for training purposes, though it can look eerie if the video’s frame rate does not match the driving speed of the train. In the good old gaming days, you had to upgrade your graphics board when the rendering juddered; here, the video recording would need a higher frame rate. For more open-world situations, or when various scenarios have to be shown, such video recordings are not the solution of choice.
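To make the frame-rate issue concrete, here is a minimal sketch in Python. It assumes, hypothetically, that the recording stores the track position of every frame and that the video was shot at a roughly constant speed; the function names and numbers are illustrative only. The simulator picks the frame that matches the train’s simulated position, and the required playback rate follows from the ratio of simulated to recorded speed.

```python
import bisect


def frame_for_position(frame_positions_m: list[float], sim_position_m: float) -> int:
    """Return the index of the last recorded frame at or before the simulated track position.

    frame_positions_m is a hypothetical per-frame odometry log, sorted ascending.
    """
    i = bisect.bisect_right(frame_positions_m, sim_position_m) - 1
    # Clamp so positions before the first or after the last frame stay valid.
    return max(0, min(i, len(frame_positions_m) - 1))


def playback_fps(recorded_fps: float, recorded_speed_kmh: float, sim_speed_kmh: float) -> float:
    """Video frames per second needed so the scenery passes at the simulated speed.

    Assumes the video was recorded at a constant speed.
    """
    if recorded_speed_kmh <= 0:
        raise ValueError("recorded speed must be positive")
    return recorded_fps * sim_speed_kmh / recorded_speed_kmh


# Hypothetical recording: 25 fps at 50 km/h.
print(playback_fps(25, 50, 100))  # 50.0: every other frame is skipped on a 25 Hz display
print(playback_fps(25, 50, 25))   # 12.5: each frame is shown twice on a 25 Hz display
```

Driving faster than the recording speed means dropping frames, driving slower means repeating them; either way, the fixed recording limits how far the simulated speed can deviate before the motion looks wrong.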

But again: is it necessary to have lots of realistic detail in the visualization? Psychologists in charge of planning such virtual test tracks for human factors studies often say: “No.” The more detailed answer is: the more detail there is in a scene, the harder it becomes to identify the real trigger for a specific action of the test person. The studies are meant to find out what really caused the human’s reaction, and when. Therefore, visualization in driving simulations is reduced to the essentials. And, again, the test person is still able to immerse themselves in the driving task and deliver realistic driving behavior.

Aside: Driving behavior

How do we know that test persons show the same driving behavior as in reality? Naturalistic driving studies are the source of this knowledge. Millions of recorded everyday drives have been analyzed with respect to which situations occurred and how people behaved. Studies with more predefined driving tasks have also been conducted to learn more about driving behavior; these can be compared with similar test runs in a simulator.

So far, so good, concerning the rendering. What about the hardware interface? Does size matter?

Driving simulators come in various sizes (often depending on the depth of the operator’s pockets). The smallest ones are just a screen and a steering wheel controller. The screen size can increase, as can the number of screens, to extend the field of view. Taken further, the screen can grow to cover 270° or even 360° so that it includes the peripheral visual field; for classic simulators, floor projection can help as well. Virtual reality headsets (head-mounted displays) can deliver a huge field of view, but they are a different story because of the unusual setup for the test person and the drawback of not being able to see where the steering wheel currently is. See-through modes might help but are less realistic than a huge screen surrounding your (virtual) vehicle.
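How much field of view a screen adds depends on its width and on the viewing distance. Here is a minimal sketch of that geometry for a flat screen centered in front of the eye, FOV = 2·atan(w / 2d). The screen dimensions are made-up example values, and the screen count assumes identical screens turned to face the driver, so that their angles simply add up.

```python
import math


def horizontal_fov_deg(screen_width_m: float, viewing_distance_m: float) -> float:
    """Horizontal angle covered by a flat screen centered in front of the eye."""
    return math.degrees(2 * math.atan(screen_width_m / (2 * viewing_distance_m)))


def screens_needed(target_fov_deg: float, screen_width_m: float, viewing_distance_m: float) -> int:
    """Number of screens needed to reach a target field of view.

    Assumes identical screens arranged around the driver, each facing them,
    so that their individual angles add up without gaps or overlap.
    """
    per_screen = horizontal_fov_deg(screen_width_m, viewing_distance_m)
    return math.ceil(target_fov_deg / per_screen)


# Hypothetical desktop setup: a 0.6 m wide monitor viewed from 0.7 m.
print(round(horizontal_fov_deg(0.6, 0.7), 1))  # 46.4
print(screens_needed(270, 0.6, 0.7))           # 6
```

A single desktop monitor covers well under 90°, which is why the jump to 270° or 360° quickly turns into projection domes rather than monitor rows.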

Stereoscopic projection could be applied as well, but it only helps for foreground elements such as the vehicle interior and close objects such as stop lines or objects in adjacent lanes. Everything farther away doesn’t become more realistic with a stereoscopic view. On the contrary, the additional equipment required for the stereoscopic experience, such as special glasses, can disturb immersion.

Thinking back to when computer games riveted us, it was enough to have just a screen in front of you. Some driving tasks require peripheral vision, however, so you have to add at least a few more displays. If you want to read more about the ease of use and immersion of two different small simulation setups, have a look at the older paper “Impact of Immersion and Realism in Driving Simulator Studies” from 2014.

Force feedback: Getting deeply moved

While playing a computer game and being really immersed, we sometimes move our body along with what is happening on the screen, even without any other input. When driving in reality, however, we get more feedback, often in the form of forces.

Most simulators are built as fixed-base simulators; sometimes they get a small (bass) shaker extension for subtle haptic feedback. The next level is to integrate a motion system beneath the driver’s seat or beneath the front part of the vehicle, or to put the whole vehicle on a motion platform (e.g., a hexapod). These motion platforms also differ in their workspace: most motion simulators are stationary and have to simulate sustained forces (e.g., acceleration and centrifugal forces) by tilting. The most complex motion simulators are mounted on rail or sled systems to create these forces by reproducing the underlying motion. Such systems are helpful for fine-tuning vehicle dynamics; just to understand how big a gap in oncoming traffic has to be for a turning maneuver, a fixed-base simulator may be enough.
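Simulating sustained forces by tilting is commonly called tilt coordination: the platform pitches the driver so that a component of gravity presses them into the seat the way a sustained acceleration would, following g·sin θ = a. Here is a minimal sketch, assuming a pure pitch rotation, a hypothetical 20° tilt limit, and a user-chosen tilt-rate limit (a few degrees per second is often used so that the rotation itself stays below the driver’s perception threshold). Real motion-cueing algorithms add washout filters and much more.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def tilt_angle_deg(sustained_accel_ms2: float, max_tilt_deg: float = 20.0) -> float:
    """Pitch angle whose gravity component along the seat axis equals the acceleration.

    From g * sin(theta) = a. Positive acceleration gives a positive angle,
    i.e. tilting the seat backward. max_tilt_deg is an assumed platform limit.
    """
    ratio = max(-1.0, min(1.0, sustained_accel_ms2 / G))
    angle = math.degrees(math.asin(ratio))
    return max(-max_tilt_deg, min(max_tilt_deg, angle))


def rate_limited(current_deg: float, target_deg: float, max_rate_deg_s: float, dt_s: float) -> float:
    """Move toward the target tilt no faster than max_rate_deg_s, so the rotation stays subtle."""
    step = max_rate_deg_s * dt_s
    return current_deg + max(-step, min(step, target_deg - current_deg))


# A sustained 2 m/s^2 acceleration maps to roughly 11.8 degrees of tilt.
target = tilt_angle_deg(2.0)
angle = 0.0
for _ in range(10):  # ten 0.1 s steps with an assumed 3 deg/s rate limit
    angle = rate_limited(angle, target, max_rate_deg_s=3.0, dt_s=0.1)
print(round(target, 1), round(angle, 1))
```

The rate limit also hints at why tilting suits sustained forces rather than short jolts: a brief braking pulse is over long before the platform could tilt far enough, which is where rail or sled systems that reproduce the actual motion have the edge.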

For more on that topic, have a look at the paper “Driver Behavior Comparison Between Static and Dynamic Simulation for Advanced Driving Maneuvers”, which compares the performance of test persons doing a slalom task in both a dynamic and a fixed-base simulator.

Engaging more senses

Sound is another interface to the human in the loop. For good reasons, car manufacturers employ sound designers to make their cars sound right, so sound has an impact on how we perceive the driving situation. Again, it depends on the research question to be answered: if you want to fine-tune the vehicle dynamics, sound will help; for answering the turn-gap question, it will not be necessary.

If you want to read more about sound and vibration improvements in a simulator, have a look at the Swedish VTI report “Improving the realism in the VTI driving simulators” from 2012.

What about olfactory displays? To be honest, in our careers in the automotive domain, building and operating driving simulators, we have not come across any experiment that takes this sense into account. That does not mean it is unimportant (some luxury brands have their own scent for their cars, and we do not mean the tree-shaped air freshener), but we hope that it does not influence driving behavior (apart from making you dizzy).

Getting real

So far we have talked about simulation, but we are not finished yet. A lot can be tested in a simulator, but sometimes a crosscheck in reality will be necessary. In early stages of development, tests take place on proving grounds, which cannot recreate reality completely either. They represent only fractions of reality: the road environment is reduced to its essential elements, the road layout is simplified, and the buildings are Potemkin villages. The scenes are less crowded, and critical traffic participants are balloon cars and cardboard figures. In the end, it is a simulation come true, but you can still learn from it (see the section on driving behavior above).

Simulation and real-world tests are both necessary to learn what really caused a human’s reaction and how the human interacts with the system. To isolate those causes, simulation in driving simulators is reduced to the essentials. After presentations of new research results in this domain, we often asked the authors whether the chosen setup was adequate to answer the research question. The answer was, of course, always: “Yes.” When we asked for more details about this certainty, the stories were similar to what we wrote at the beginning of this post: simplified visualization and input devices were already good enough to let the test person immerse themselves in the driving task. It had worked in previous studies, so it would work for the current study as well. The real answer, however, is: “Yes, because we are not operating anything more sophisticated, or we do not have the resources to afford a more realistic setup.” It should also be taken into account that humans can adapt to the current situation: if they are used to doing the driving task on displays, it is easier for them to immerse themselves in this task with familiar equipment.

One more thing: A single thing or the sum of its parts

When it comes to automated driving, the human in the loop is no longer the most critical element in the system. The vehicle has to take over the perception task completely, so sensors come into focus. A human has their own neural network to anticipate scenes and react accordingly; sensors are just devices that exploit specific physical effects to gather knowledge for the self-driving system. The big question now is: is our representation of reality, in simulation as well as on the proving ground, good enough to make the sensors return the results they would in reality?

In simulation, the visualization was originally created for human perception, so providing a proper image was sufficient. When radar systems for distance control became popular, you could emulate the sensor’s output by simply calculating the distance to the 3D objects in the virtual environment. On proving grounds, by contrast, you already had to build the environment and the moving objects so that they would reflect the radar beam properly.
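The early radar emulation described above can be sketched as an idealized sensor that only measures geometric distances in the virtual scene. This is a minimal sketch, assuming objects are given as positions in the ego vehicle’s coordinate frame; the class name, range, and opening angle are hypothetical example values. Note what it ignores: materials, reflections, and noise play no role at all.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class SceneObject:
    name: str
    x_m: float  # longitudinal position relative to the ego vehicle, forward positive
    y_m: float  # lateral position relative to the ego vehicle, left positive


def ideal_radar(objects: list[SceneObject], max_range_m: float = 150.0,
                half_fov_deg: float = 10.0) -> Optional[tuple[float, SceneObject]]:
    """Return (distance, object) for the nearest object ahead inside range and opening angle.

    Purely geometric: no materials, reflections, multipath, or noise.
    """
    nearest = None
    for obj in objects:
        if obj.x_m <= 0:  # behind or beside the sensor
            continue
        dist = math.hypot(obj.x_m, obj.y_m)
        azimuth = math.degrees(math.atan2(obj.y_m, obj.x_m))
        if dist <= max_range_m and abs(azimuth) <= half_fov_deg:
            if nearest is None or dist < nearest[0]:
                nearest = (dist, obj)
    return nearest


scene = [
    SceneObject("lead car", 30.0, 0.0),
    SceneObject("parked car", 20.0, 10.0),  # outside the opening angle
    SceneObject("far truck", 200.0, 0.0),   # out of range
    SceneObject("follower", -5.0, 0.0),     # behind the ego vehicle
]
hit = ideal_radar(scene)
print(hit[0], hit[1].name)  # 30.0 lead car
```

Such a ground-truth shortcut was good enough for distance control, but it has no notion of what an object is made of.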

Most current automated driving approaches use several different sensors, such as near- and far-field cameras, long- and short-range radars, and lidars. Each of them responds differently to the objects and to the materials those objects are made of. It won’t be enough to model an object as just “one” thing with a texture (and fancy shader effects) on top; the object has to be composed of its parts: a building consists of concrete, wood, and glass structures, a vehicle has glass, plastic, and metal elements… and more and more bicycles are made of carbon instead of aluminum (and some still of steel). Such a mixture will produce different results than an object made of a single material. How to bring this into the simulation is a different story.

Additionally, the processing of the perception data has to be taken into account. Early camera-based systems, e.g., for lane keeping assistance, used image-processing algorithms for feature extraction such as “find the white line on the dark surface”. This feature relied on contrast calculation, and such contrasts can be found both in real-world video recordings and in computer-generated images. Nowadays, neural networks are increasingly expected to solve the task, trained on (hopefully) huge amounts of data. But nobody can really tell exactly what a neural network is learning, not even with supervised learning. As a result, a neural network trained on computer-generated images can have serious problems delivering useful results on real-world images, and vice versa. The generated images have to become almost photorealistic to be a proper input covering every kind of situation the system should be able to understand. Otherwise, you have to find such situations in reality…
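The classic contrast feature “find the white line on the dark surface” can be illustrated on a single image row. This is a minimal sketch, assuming an 8-bit grayscale row of pixel intensities; the edge threshold and the plausible marking widths are hypothetical values, not those of any real lane keeping system. Because it only looks at brightness differences, it behaves the same on a rendered image as on a camera image with similar contrast.

```python
def find_markings(row: list[int], edge_threshold: int = 60,
                  min_width_px: int = 2, max_width_px: int = 20) -> list[tuple[int, int]]:
    """Return (start, end) pixel spans where a bright stripe lies on a darker surface.

    A rise of at least edge_threshold marks a dark-to-bright edge (candidate start);
    a matching drop marks the bright-to-dark edge (candidate end).
    """
    spans = []
    start = None
    for i in range(1, len(row)):
        diff = row[i] - row[i - 1]
        if diff >= edge_threshold:
            start = i
        elif diff <= -edge_threshold and start is not None:
            if min_width_px <= i - start <= max_width_px:
                spans.append((start, i))  # end index is exclusive
            start = None
    return spans


# Synthetic row: dark asphalt (50) with two bright markings (200), five pixels wide each.
row = [50] * 10 + [200] * 5 + [50] * 10 + [200] * 5 + [50] * 5
print(find_markings(row))  # [(10, 15), (25, 30)]
```

Every decision here is an explicit, inspectable rule, which is exactly what a trained neural network does not offer.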

Conclusion

In the end, reality is the real thing: overwhelmingly complex and multifarious. Reducing it so that it fits into computable simulations will remain inevitable, even as computing power grows. The models can get more complex but will still be simplified models. Finding the right balance will continue to be the challenge. And we assume that “Yes, we had the necessary level of immersion for our task.” will also continue to be the answer, because the interviewee wasn’t able to afford anything more realistic.