Expert Interview: Alex Goldberg of MathWorks on map data, data formats and virtual worlds

April 20th, 2022 | by GEONATIVES

(9 min read)

Maps, data formats and virtual worlds are key ingredients for enabling mobility solutions. Alex Goldberg of MathWorks is one of THE experts when it comes to the data itself and to the tooling around the whole data processing pipeline. We are glad, and highly appreciate, that he took the time for an interview with us at GEONATIVES despite an extremely busy schedule. Enjoy!

Alex, thank you very much for taking the time to answer a few questions for the blog of our GEONATIVES Think Tank. You are definitely one of THE experts for creating and maintaining HD maps. Would you mind introducing yourself to our readers?

Thank you for inviting me! My name is Alex Goldberg, and I’m a principal engineer at MathWorks. I’ve been working with virtual road and environment models for 15 years.

In 2006 I joined PixelActive, where we built a city-modeling tool for rapid creation of simple road networks. We were acquired by HERE Maps, and I spent six years as a tools lead on scaled HD Map creation and validation for autonomous driving.

I left to co-found VectorZero in 2017, where we set out to build the best-in-class HD road editor: RoadRunner. This was our third experience building a virtual road model from scratch. We knew from HERE that the world’s roads are full of complicated edge cases that require a sophisticated road model. Interoperability with common formats and tools was a key design decision, especially OpenDRIVE, Unity, and CARLA.

In 2020, we were acquired by MathWorks. We have since had the pleasure of expanding our efforts on RoadRunner, creating new features and add-on products to accelerate the pace of innovation in HD map construction and computation.

You are currently involved in importing, editing, and exporting map data for ADAS/AD simulation purposes. Please describe this process for our readers.

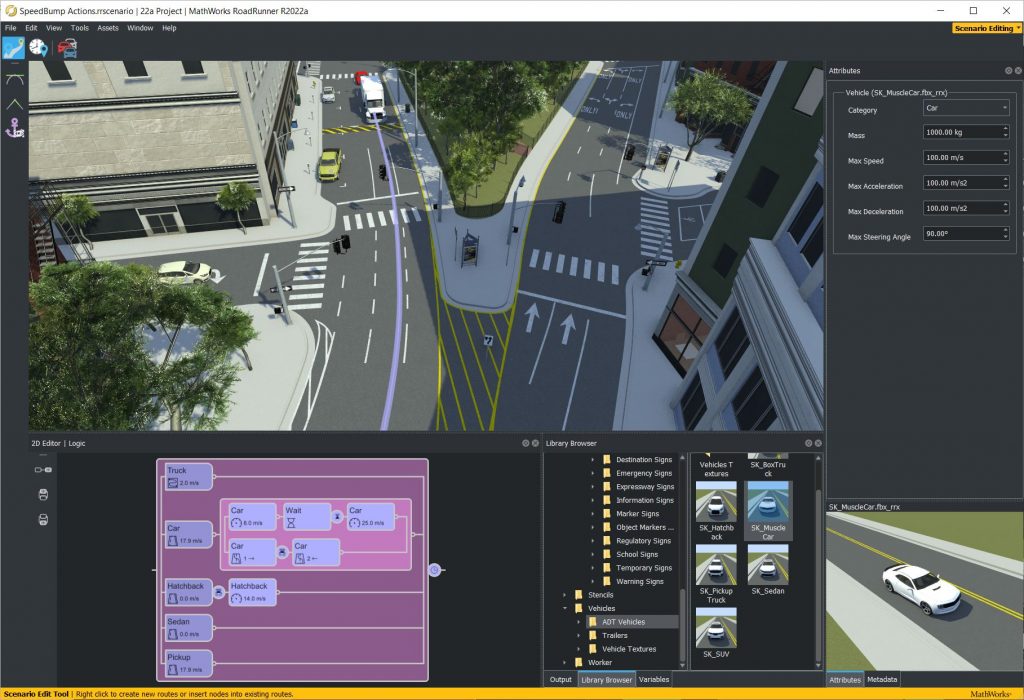

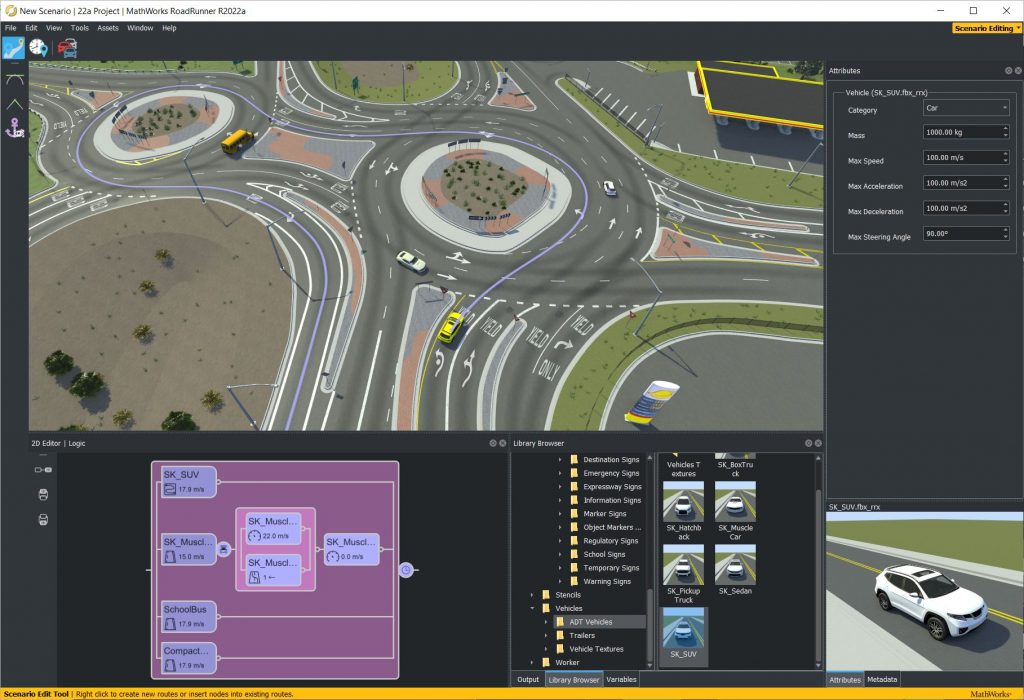

Our customers use RoadRunner to synthesize 3D scene models and semantic HD Map data, which is then exported to a range of formats and simulators for testing automated driving systems. These scenes could be real-world locations, such as an urban corridor with parking lots for testing autonomous taxis. They could also be imagined locations, such as a gauntlet of complex intersections with occluded signage for testing edge cases in planning algorithms.

This process can start with visual reference data, such as Lidar point clouds, aerial imagery, or GIS vector files. This data is automatically transformed into a consistent projection and visualized. A user can then leverage RoadRunner’s suite of powerful and intuitive editing tools to digitize 3D roads, signs, traffic signals, barriers, and surrounding objects such as buildings and foliage.

The construction process can be accelerated by importing existing HD Map content from OpenDRIVE or (with our Scene Builder add-on) from HD Map vendors such as HERE and TomTom. The same editing tools can be used to enrich and extend the imported data. For example, users can add missing roads and parking lots, or modify the lane boundaries to align with more recent reference data.

This content is validated and seamlessly exported to a range of formats and simulators. This can be accomplished for a single scene using a few clicks, or for large batches of scenes using our programmatic API.

With your long history in creating tools for editing and maintaining map databases, you have surely optimized the internal representation of your data. Where do you see the biggest challenges in representing real-world situations in a data format?

One thing I’ve learned over the years is that real roads are complicated. Every simplifying assumption is sure to be proven wrong somewhere in the world. When evaluating a map data model, there are various design criteria to consider. A few criteria include:

- Expressive power: What can the model represent? Can it be expanded for additional feature types?

- Ease of construction: Is the model amenable to human authoring? How about automated construction (e.g. from sensor detections)? Can artificial content be easily synthesized (for test protocols, or to explore edge cases)? Can existing HD Map data be easily converted to the model?

- Ease of maintenance: Can authored content be enriched with more data? Can humans interpret and correct bad content (e.g. from automated algorithms)?

- Ease of consumption: How usable is the model for AD algorithms? Consider geometric representation, coverage (tiling, storage footprint), spatial projection, versioning, etc.

In practice, these criteria are often competing. A single model may not be the answer.

Do you see your internal data format as an alternative or superior to other published data formats? Are there (technical/logical) limitations that would prevent 3rd parties from using it?

Our data model is optimized for editing and interaction. Combined with RoadRunner’s algorithms, it enables rapid modeling and editing of detailed urban roadways. However, this power comes with the cost of computational complexity. For example, our model’s flexible topology allows an offramp to be introduced anywhere along a road (without ‘chopping’ the road). This is powerful for modeling, but challenging for routing algorithms.

For map data consumers (e.g. algorithms running in autonomous vehicles), simple topology and geometry are important. Ease of editing is not. For this reason, I think there is a need for at least two models: a flexible model for editing, and a simple HD Map model for publication. I think the greatest industry benefit would come from an alignment on the HD Map model.

ASAM OpenDRIVE is one of the key standards for maps in simulation applications. How does this format influence your work and where do you see its limits, especially in comparison to “production” formats?

OpenDRIVE import and export has been important since the inception of RoadRunner, so the format naturally continues to drive product requirements.

In terms of limits, let’s try evaluating the existing specification (OpenDRIVE 1.x) against the design criteria above:

- Expressive power:

  - Pros: It can represent a wide range of road carriageways (groupings of contiguous lanes with varying lane widths) with continuous precision. It is also open to extension.

  - Cons: Real-world junctions can be difficult to represent, as it is often tricky to find a perpendicular separation between junction and non-junction. Often, scene content authors must get quite ‘creative’ to represent a situation in OpenDRIVE.

- Ease of construction:

  - Pros: Its parametric nature makes it useful for rapid synthesis and prototyping, especially for freeway situations. Intuitive editing tools can be created for OpenDRIVE (and similar) models.

  - Cons: Converting sensor detections or even clean HD Map data to OpenDRIVE is very challenging. While possible in many situations, it is quite difficult to avoid accuracy loss in urban areas.

- Ease of maintenance:

  - Pros: Independent geometry profiles (for elevation, lane widths, etc.) allow data to be added or modified without impacting existing data.

  - Cons: It can be difficult to make localized changes. For example: a change to the plan curve near the start of the road can have substantial impact on the end of the road. Modifying road shape also impacts object locations, which makes it difficult to maintain roads in isolation.

- Ease of consumption:

  - Pros: Parametric representation enables rapid distance-based queries (e.g. number of lanes 50 meters ahead).

  - Cons: It can be surprisingly tricky to correctly and efficiently derive 3D locations of road features. For example, deriving a polyline trace of a lane boundary to a given spatial precision could require analysis of a dozen independent geometry channels. Many operations depend on accurate computation of curve arc lengths, which have no closed-form solution for some of the curve types. Small errors in length computations can accumulate and have a large impact on long roads. There is no native support for tiling, and converting between geospatial projections is error-prone.
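To make the arc-length point concrete: the length of a cubic polynomial curve generally has no elementary closed form, so it has to be integrated numerically. The following Python sketch is not taken from RoadRunner or from any OpenDRIVE library. The function name `cubic_arc_length`, the coefficients, and the segment count are assumptions chosen purely for illustration. It compares a coarse and a fine Simpson integration of one segment and shows how the per-segment difference adds up along a long road.

```python
import math


def cubic_arc_length(b, c, d, u_end, steps):
    """Approximate the arc length of v(u) = b*u + c*u**2 + d*u**3 on [0, u_end].

    Uses composite Simpson's rule on sqrt(1 + v'(u)**2); `steps` must be even.
    """
    if steps < 2 or steps % 2:
        raise ValueError("steps must be a positive even number")

    def speed(u):
        slope = b + 2.0 * c * u + 3.0 * d * u * u
        return math.sqrt(1.0 + slope * slope)

    h = u_end / steps
    total = speed(0.0) + speed(u_end)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * speed(i * h)
    return total * h / 3.0


# Hypothetical road: 100 identical cubic segments, each spanning 20 m in u.
SEGMENTS = 100
coarse = cubic_arc_length(0.0, 0.02, -0.0005, 20.0, steps=2)
fine = cubic_arc_length(0.0, 0.02, -0.0005, 20.0, steps=200)

# The per-segment error is small, but it is added once per segment.
per_segment_error = abs(coarse - fine)
accumulated_error = per_segment_error * SEGMENTS
print(f"per-segment difference: {per_segment_error:.6f} m")
print(f"accumulated over {SEGMENTS} segments: {accumulated_error:.6f} m")
```

Any feature positioned by distance along the road inherits the accumulated error, which is why a consumer has to choose its integration accuracy with the whole road length in mind rather than a single segment.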

Some of the ‘cons’ will be addressed in OpenDRIVE 2.0. We’re excited to help support and evaluate OpenDRIVE as it evolves to meet the challenges of modeling reality. I particularly like the trend towards spatially-correlated layers (‘Area concept’), simplified geometric representations, and the introduction of junction borders. I’m also very encouraged by the work to standardize international sign representations.

A lot of software stacks, esp. for automated driving, build upon proprietary map data formats. How often do you see similar specifications and ask yourself why everybody has to re-invent the wheel?

When you consider both public and proprietary map representations, there is unquestionably a high degree of duplication. This isn’t terribly surprising – companies have existing toolchains based on internal formats that have evolved over years. Often, these formats are optimized for different design criteria than standard formats (e.g. compatibility with proprietary pipelines and algorithms).

It’s easy to start a new road format, but it’s equally easy to underestimate the complexities of real roads and traffic rules. How many formats can represent a lane that’s bidirectional for buses every Tuesday?
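As a rough illustration of what such a rule demands from a data model, here is a minimal Python sketch of a lane whose travel direction depends on vehicle type and weekday. All class and field names (`Lane`, `ConditionalDirection`, `direction_for`) are hypothetical and do not correspond to any existing format. Real traffic rules would also need time-of-day windows, holidays, and more.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ConditionalDirection:
    """Hypothetical override: applies to some vehicle types on some weekdays."""
    vehicle_types: frozenset
    weekdays: frozenset  # 0 = Monday ... 6 = Sunday, as in datetime.weekday()
    direction: str       # "forward", "backward" or "both"


@dataclass
class Lane:
    default_direction: str
    overrides: tuple = ()

    def direction_for(self, vehicle_type, when):
        # The first matching override wins; otherwise fall back to the default.
        for rule in self.overrides:
            if vehicle_type in rule.vehicle_types and when.weekday() in rule.weekdays:
                return rule.direction
        return self.default_direction


TUESDAY = 1
bus_lane = Lane(
    default_direction="forward",
    overrides=(ConditionalDirection(frozenset({"bus"}), frozenset({TUESDAY}), "both"),),
)

print(bus_lane.direction_for("bus", datetime(2022, 4, 19)))  # a Tuesday
print(bus_lane.direction_for("car", datetime(2022, 4, 19)))
```

Even this toy version forces direction to become a function of context rather than a static attribute, which is the kind of complexity a new format tends to discover late.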

Some duplication is healthy for innovation, but I think the industry should seek to align on certain fundamentals. For example: a standardized coding of international lane marking and traffic signal types.

Simulation may be performed within pure artificial worlds (i.e., without any real-world correlation). Taking your user base into account, where do you see most users – in the creation and application of artificial environments or in the modeling of replicas of real-world locations?

Many RoadRunner users are modeling real-world locations (particularly in areas near research sites). But there is also a sizeable portion who are modeling artificial environments, either based on standardized test scenarios or internal specifications.

I think over time we’ll see a shift towards artificial environments generated by programmatic variation. Standardized test scenarios are often based on simple/common situations with light variation (e.g. simple freeway section with a range of curvatures). But automated vehicles can already handle such cases. The challenges lie in the edge cases: blind intersections, faded markings, road debris, etc. Especially important are situations that include multiple edge cases at once. Millions of test cases are needed, and generating them all by hand is impractical.
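A minimal sketch of what programmatic variation could look like, assuming a hypothetical parameter space (the parameter names and values below are invented for illustration and are not a RoadRunner API): every combination of the listed values becomes one test case.

```python
import itertools


def scenario_variations(parameter_space):
    """Yield one dict per combination of the values in `parameter_space`."""
    names = list(parameter_space)
    for values in itertools.product(*(parameter_space[name] for name in names)):
        yield dict(zip(names, values))


# Hypothetical parameters combining several edge cases at once.
space = {
    "curve_radius_m": [50, 100, 200, 400],
    "marking_condition": ["fresh", "faded", "missing"],
    "intersection_visibility": ["clear", "occluded"],
    "road_debris": [False, True],
}

variations = list(scenario_variations(space))
print(len(variations), "scenarios, first one:", variations[0])
```

The count is the product of the option counts per parameter, so a handful of additional parameters quickly pushes the total into ranges that cannot be authored by hand.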

Any data that has been surveyed is “old data” with decreasing confidence over time. What possibilities do you see for a continuous update of geodata, e.g. by vehicles equipped with all kinds of ADAS sensors, by remote sensing or by using sensor-equipped drones?

I believe that’s a fundamental requirement for any long-term production usage of HD Maps. Dedicated mapping vehicles are slow to schedule and costly to maintain, and could never keep up with the pace of changes to real roads. Human review of every change is untenable. At the same time, freshness is critical for functionality and safety. If HD Maps continue to be a requirement to enable automated driving, then updates must be continuous and highly automated.

Going one step further: Do you see a chance that geodata may also be completely crowd-sourced by using on-board sensors of regular vehicles only?

A major challenge here is the fact that the sensors currently required to create accurate HD Maps are too costly (and probably too unsightly) to install on consumer vehicles. It’s possible that fusion of a large volume of lower-quality detections could result in sufficient accuracy. But there are technical, security, and privacy considerations.

Before we get there, consumer vehicles could be used for detecting and reporting the presence of deviations between the map and reality. Vehicles with more capable sensors (e.g. attached to fleets of delivery trucks) could then be scheduled for higher-definition capture.

Reality is available to everyone (who wants to perceive it). Who should own the digital twin of reality?

Before talking about ownership, we must first define what ‘the’ digital twin really is. Even if we restrict the conversation to automated driving on public streets, I think we’ll find many disagreements in the industry on near-term and long-term requirements for a digital twin of reality. I have my doubts that self-driving use cases alone can justify the massive effort of building and maintaining a fresh, accurate digital twin over the long run. So we must naturally extend the conversation to indoor, aerial, and mixed-mode use cases. While we’re at it, let’s go ahead and throw in location commerce, metaverse-type use cases, and anything else we cook up in the next few decades.

What sort of twin can handle these use cases? Imagine that we had a fresh and accurate point cloud of every surface in the world (an impossible prospect). Is that sufficient to enable an autonomous vehicle to safely and legally navigate a complex intersection? Can I use it to estimate how many eyes will see a new billboard? Or render it at interactive frame rates on a VR headset?

I don’t think the digital twin of reality is singular – neither in space, nor in time. I think the digital twin of the future is an ecosystem. An aggregation of raw data from all sorts of sensors (a Lidar scan from a mapping vehicle, imagery from a hobbyist’s drone, a motion sensor at a toll booth gate). Layers of registration and fusion algorithms. Data pipelines running detections and transformations. Application frameworks for labeling, editing, and visualization. Marketplaces for selling data and subscribing to derived products.

In short: the digital twin is more of a location platform than a data set. And who should own it? Well, who owns the internet?

Final question: With our initiative being roughly a year old, what topics would you like to see covered in a blog like ours?

Personally, I would be interested in seeing any content that helps enable data-driven decision making. Achieving ‘One’ digital twin will ultimately rely heavily on standardization efforts, and it will be important to measure success using data. For example: A survey of test scenarios defined by different government bodies, evaluation criteria and challenge cases for digital twin efforts, etc.

I’ve enjoyed your content thus far, and I’m looking forward to seeing more – thank you for starting this initiative!

Thanks a lot, Alex!

Recommended Reading

The following links are provided by GEONATIVES based on the content given in the interview.

- For OpenDRIVE 2.0, please refer to the ASAM Website

- For accurate point clouds of the world, refer to the TanDEM-X website